I have taken a spoon and scooped out the part of my brain that learned those 3 languages very long ago.

However, I cannot even begin to list all the compiled languages, scripting languages, meta-languages and implementations of lambda calculus with various cryptic names that have since exploded into existence. I wonder why this has to be.

It seems that once I had learned my 4th or 5th assembly language (finally, for 64-bit Intel), I'd had it with those. Also, way back when there were only a few flavors of C (always bastardized for some specific company like Microsoft, DEC, etc.), I could have just settled on C. In fact, I prefer C even today. Concise, no bullshit, fast.

But, no. There had to be every Tom, Dick and Harry's version of re-implemented-smalltalk-like-pascal-superscript-lisp-forth-C++. In addition to many C++ versions there is C# (and 3 different ones, too!). There are so many languages I can only refer to Wikipedia for a relatively comprehensive list of "famous" languages.

There are, unfortunately, many "unfamous" languages that were designed in-house for various corporations, or even for specific departments within corporations, or even for specific people. The reason for this is not clear, but I would bet that it has to do with the phenomenon that it is easier for some people to learn something by re-inventing it.

I think there is also another factor, one that is very apparent with JavaScript and various networking languages -- security. The only way security can be maintained is to write a new language that implements whatever essential subset of instructions or functions must be there, and leaves out the unneeded or dangerous ones. Thus there are dozens of half-JavaScripts out there that implement everything except the ability to actually use them in real web pages. That way there are no security problems.

This is similar in nature to JavaScript itself (which is a misnomer anyway -- it is ECMAScript, which sounds much like a skin rash to my ears), which leaves out all the file I/O, process control and other things that could let a virus or worm loose on networked computers. Despite this, various viruses and worms were written in JavaScript anyway.

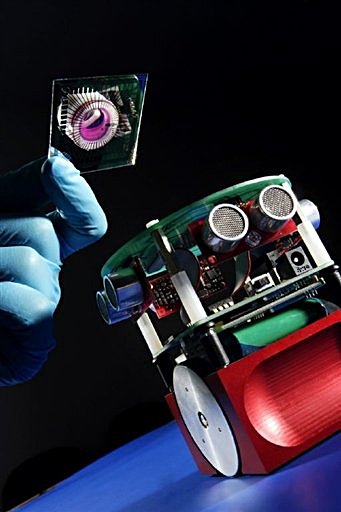

So, there are all kinds of languages -- at least one for every 2 or 3 programmers. I've written my own languages (3 in total), although I would never expect them to still exist after all these years. They were specific to certain manufactured robots anyway, so they would not be useful for anything more general. One was called ZMAT, which is all I need to remember about it.

I have also written 3 operating systems. One was for a Z8000 system (ZOS), another was for a Z80000 system (ZMS), and another was for a graphics processing board (GAS). But all of those have since died merciful deaths. Like most computer hardware systems, they had short life spans, so the operating systems that ran on them also had short life spans.

I hope to never write another OS or another language. But even though C is just fine with me, or even C++ if the job needs formality and the STL, it seems that for the rest of my life (probably not excessively long, now) I might still have to use dozens of other languages.

In the world of WPF (within the world of Visual Studio version whatever++) there are many subset languages, mostly C# and XAML. Other environments have their equivalents, although done in C++ and XML or Java and XML. I could just shoot C# in the head. It has nothing on Java (not that Java smells that nice either), so it seems to be merely another Microsoft way of jamming their programming environments down our throats.

Oh, well. It's a living. Oh, wait! It's not even a living. Nobody wants to pay programmers anymore. Unless they live in India. Yet another batch of languages.